
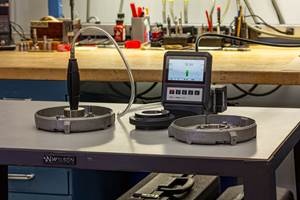

MusashiAI’s automated visual inspection system leverages robotic, optical, edge computing and artificial intelligence technology to produce better results than human visual inspectors in testing. Human inspectors can determine whether a part is good or bad with a high accuracy rate within two seconds; in testing, the automated system also reached high accuracy, in less than two seconds. Photos provided by MusashiAI.

Visual inspection is a critical step in the automotive part production process, but it can be a difficult job. Not only is visually examining parts tedious, but it’s also high-pressure work that can be physically challenging. On top of this, it requires a massive number of people. According to Ran Poliakine, co-founder of automation company MusashiAI, 20% of the employees working in the automotive industry in Japan are visual inspectors, and the proportion is similar in some other countries and industries. These employees could be performing more interesting and useful work that’s less physically demanding, but the visual inspection process has proven difficult to automate. However, recent developments in optics, edge computing and AI have enabled MusashiAI to develop an automated visual inspection system that can perform as accurately as humans, but faster. And with a new method for teaching the AI, the system can also be set up and redeployed faster than a human can be trained.

In order to understand what this visual inspection system does, Poliakine says it’s helpful to first understand exactly what a human visual inspector does. These employees stand at the end of an automotive production line. They take a finished part from a conveyor and within two seconds, decide if it’s good or defective, and then sort the part into either a “good basket” or a “bad basket.” An effective AI system is one that can accurately make this same snap judgement and reliably perform the same sorting.

Musashi Seimitsu, a Honda subsidiary based in Japan, wanted to shift its visual inspection workforce into jobs that are physically easier and more interesting to do. But it first needed to find an automation system to replace them. So Musashi Seimitsu partnered with SixAI, an Israeli automation and AI company, to form a joint venture, MusashiAI, that could develop a solution.

Developing an Automated Visual Inspector

Visual inspection requires humans to use their hands and arms to grab the part off the conveyor and sort it into the appropriate place, their eyes to see the part, and their brains to know whether the part is good or defective. It was easy for the company to automate the process of grabbing and placing the parts because that technology has been around for years. “Musashi is very good at robotics, so that was not a problem,” Poliakine says.

The next step was to develop an optical system that could mimic the human eye. “The human eye is very, very sophisticated because the eye can focus automatically on the wavelengths that show the defect in the best way,” Poliakine explains. “There is a communication between the brain and the eyes that enables the eyes to adjust to the condition for what they’re looking for.” The company designed a solution that, on one hand, behaves like a human eye and, on the other, adds optimizations such as sensors that focus on the specific wavelengths best suited to revealing defects on parts. For example, the system doesn’t look at color, which saves time and money because in an image from a traditional RGB camera, much of the data is devoted to color.
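To put the color point in rough numbers, the sketch below compares the raw size of one uncompressed 8-bit frame stored as three color channels with the same frame stored as a single channel. The 2048 × 1536 resolution is a hypothetical example, not MusashiAI’s hardware, and real cameras often compress images or capture through a Bayer mosaic, so treat this as back-of-envelope arithmetic only.

```python
def frame_bytes(width: int, height: int, channels: int, bytes_per_sample: int = 1) -> int:
    """Raw, uncompressed size of one image frame in bytes."""
    return width * height * channels * bytes_per_sample


# Hypothetical 2048 x 1536 sensor with 8-bit samples (illustrative only).
WIDTH, HEIGHT = 2048, 1536

rgb = frame_bytes(WIDTH, HEIGHT, channels=3)   # red, green and blue per pixel
mono = frame_bytes(WIDTH, HEIGHT, channels=1)  # one intensity value per pixel

print(f"RGB frame:    {rgb / 1e6:.1f} MB")
print(f"Single band:  {mono / 1e6:.1f} MB")
print(f"RGB carries {rgb // mono}x the raw data per frame")
```

In this sketch, dropping color cuts the raw data per frame to one third, which is less to move and process when each decision is due in under two seconds.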

The third step was to develop and train an AI that could identify good and bad parts. This was made possible by edge computing, in which data processing and storage are handled separately from the device that needs them, as in cloud computing, but much closer to where the data is generated than a remote cloud data center. Keeping computation close to the production line improves response time and saves bandwidth.

Visual inspection can be a tedious, high-pressure and physically straining job for a human. According to Poliakine, this automated system can free up the large numbers of human visual inspectors to perform more interesting and less strenuous work.

Training the AI

Backed by the speed of edge computing, MusashiAI had to devise a way to replicate what happens in a human’s brain while deciding if a part is good or faulty. The company started training an AI to identify good and bad parts via a system of rewards and punishment, punishing the system by making it start from the beginning again if it couldn’t identify parts correctly. However, the company was running into challenges because the system was inspecting forgings. Forged parts can have many types of defects — according to Poliakine, there are at least 27 different families of defects that can be found in forged parts. The sample size would need to be massive in order to find enough parts with each type of defect to teach the AI to correctly identify them. “Their production is very, very high quality from the get-go,” Poliakine adds. It would have taken the company months to go through a sample size large enough to teach the AI all of the different defects, making it difficult to train and impractical to redeploy. In addition, even acceptable forged parts naturally tend to vary so much that it’s impossible to find perfect samples to use. “There could be parts with a lot of small defects in them, but they’re OK,” he says. “So how do you teach that?”
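The sample-size problem can be sketched with expected-value arithmetic. If a defect family shows up on average once in every N parts, collecting k examples of it takes about k × N parts, and the training set is complete only when the rarest family has supplied enough examples. All the rates, the example count and the cycle time below are hypothetical, chosen only to illustrate the scale on a high-quality line.

```python
def parts_to_inspect(one_in: int, examples_needed: int) -> int:
    """Expected number of parts inspected before collecting `examples_needed`
    defective examples, when the defect appears once in every `one_in` parts."""
    return examples_needed * one_in


# Hypothetical rarity of each of the 27 defect families ("1 in N parts").
families_one_in = [1_000] * 9 + [10_000] * 9 + [50_000] * 9
EXAMPLES_PER_FAMILY = 50   # hypothetical examples needed to learn one family
SECONDS_PER_PART = 2       # hypothetical cycle time on a single line

# The rarest family sets the sample size for the whole training set.
needed = max(parts_to_inspect(n, EXAMPLES_PER_FAMILY) for n in families_one_in)
days = needed * SECONDS_PER_PART / 86_400  # seconds in a day

print(f"Parts to inspect: {needed:,}")
print(f"Days of production on one line: {days:.0f}")
```

Even with these modest assumptions, the rarest family alone demands millions of parts, which is why a defect-first training approach is slow to set up and hard to redeploy.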

In its search for a new approach, MusashiAI decided that, instead of teaching the AI to identify defects, it would train the AI to identify good parts. “We gave the robot 50 parts that were different, but all of them were good. We taught the algorithm that this is a good sample,” Poliakine explains. “And the algorithm taught itself what the common things were between these parts, and that anything that is not statistically part of this group may have a defect.” Poliakine says this process takes between half a day and a day. Because training for a new part takes a day at most, users can easily retrain the system to inspect a different part and deploy it on a new production line.
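The article doesn’t describe MusashiAI’s algorithm. One simple way to illustrate learning only from good parts is one-class (novelty) detection: fit statistics to the good samples, then flag anything that falls too far outside them. The sketch below assumes each part has already been reduced to a few numeric features; the feature names, the 4-standard-deviation threshold and the synthetic data are all hypothetical, and a production system would more likely learn its features directly from images.

```python
import random
import statistics
from dataclasses import dataclass


@dataclass
class GoodPartModel:
    """Hypothetical one-class model fitted only on known-good parts."""
    means: list[float]
    stdevs: list[float]
    threshold: float = 4.0  # hypothetical: max allowed |z-score| on any feature

    @classmethod
    def fit(cls, good_parts: list[list[float]], threshold: float = 4.0) -> "GoodPartModel":
        columns = list(zip(*good_parts))  # one tuple of values per feature
        return cls(
            means=[statistics.mean(c) for c in columns],
            stdevs=[statistics.stdev(c) for c in columns],
            threshold=threshold,
        )

    def score(self, part: list[float]) -> float:
        """Largest per-feature distance from the good-part average, in standard deviations."""
        return max(abs(x - m) / s for x, m, s in zip(part, self.means, self.stdevs))

    def is_suspect(self, part: list[float]) -> bool:
        """True if the part is not statistically part of the good group."""
        return self.score(part) > self.threshold


random.seed(0)
# 50 varied-but-good parts, each described by 3 hypothetical features
# (e.g. mean surface brightness, edge density, texture energy).
good = [[random.gauss(100, 5), random.gauss(0.30, 0.02), random.gauss(12, 1)]
        for _ in range(50)]
model = GoodPartModel.fit(good)

typical = [102.0, 0.31, 12.5]   # within normal part-to-part variation
odd = [100.0, 0.45, 12.0]       # edge density far outside the good population
print(model.is_suspect(typical), model.is_suspect(odd))
```

The model never sees a defective part. The threshold sets the trade-off between missed defects and good parts that get flagged for a second look.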

An Automated Workforce

According to Poliakine, despite what we believe about human error, human inspectors are actually highly accurate at determining whether a part is good or bad within two seconds. The best automated inspection system prior to MusashiAI’s had a 76% accuracy rate within 26 seconds, making it impractical for use on an automotive production line. MusashiAI’s visual inspector, however, was able to surpass humans in testing. “After we taught the algorithm and used the proper edge computing and optics, we got to over 99% recall (true positive rate) and a very high number for specificity (true negative rate),” he says, “but it was in less than two seconds, which is better than human beings.”
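Recall and specificity come from the four possible outcomes of a pass/fail test. The sketch below assumes “defective” is the positive class, which is the usual convention in defect detection but is not stated in the article. The counts are invented for illustration and are not MusashiAI’s results.

```python
def recall(tp: int, fn: int) -> float:
    """True positive rate: share of truly defective parts that were flagged."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: share of truly good parts that were passed."""
    return tn / (tn + fp)


# Hypothetical test run: 200 defective parts and 9,800 good parts.
tp, fn = 199, 1      # defective parts: caught vs. missed
tn, fp = 9_702, 98   # good parts: passed vs. wrongly rejected

print(f"recall      = {recall(tp, fn):.3f}")
print(f"specificity = {specificity(tn, fp):.3f}")
```

When good parts vastly outnumber defective ones, plain accuracy can look high even if many defects slip through, which is why recall and specificity are reported separately.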


As MusashiAI considered how to deploy this system in other factories, it chose a Robot-as-a-Service (RaaS) model. Poliakine says that Musashi Seimitsu’s CEO suggested treating the robots like employees rather than as a capital expenditure. Instead of customers purchasing the systems outright and amortizing them over time, MusashiAI places the systems on the shop floor and charges the customer a “salary” for each robot.

Although this system was developed for the automotive industry, it could also be applied to other industries. Poliakine says the company’s next steps are to expand the visual inspection system across the automotive industry and then into similar industries with high production rates and stringent inspection requirements, such as medical and aerospace: industries “where you cannot possibly afford to have a failed part,” he says.
