
Automated manufacturing facilities are full of physical barriers — guarding and fences — because of our fears.

That is, because of our entirely legitimate fears. Automated systems, industrial robots in particular, are capable of moving fast and unexpectedly, imperiling a human who comes too close. A fence is a reasonable precaution. However, Patrick Sobalvarro points out that fences are far from fully effective as safety measures. People are quick and clever, and often too effective at finding workarounds for physical barriers. He looks forward to the day when people can work safely alongside even powerful robots without fences because vision technology and artificial intelligence (AI) provide for greater safety than a physical barrier can deliver.

And the day when this is possible might arrive yet this year.

Dr. Sobalvarro is one of the cofounders of Cambridge, Massachusetts-based Veo Robotics, a company that has been working to develop a collaborative robot or “cobot” system based not on force and speed limitation, which is typical of cobots in use today, but instead on speed and separation monitoring achieved reliably using AI and advanced vision technology. Veo’s “Freemove” system can make any robot collaborative, he says, providing the robot with the ability to operate close to a person without threat to that person’s safety. In fact, during my visit to Veo’s headquarters, I saw demonstrations involving standard industrial robots from brands I recognize. Existing robot safety standards, including ISO 10218, ISO/TS 15066 and IEC 61508, all recognize this system’s approach to realizing operator safety, and Veo now has a commercial version of Freemove for production facilities on the market.

The system answers what will soon be recognized as a growing need for collaborative automation, he says, a need the most commonly used cobots available today cannot address alone.

Collaborative automation “gives you the option to have a human in the cycle,” he says, and this option—as opposed to full automation—is becoming more valuable. Various trends in manufacturing make full automation increasingly problematic, including mass customization, shorter product cycles and tightening quality demands. “For a growing number of manufactured products, you will never amortize the cost of full, dedicated automation before you have to change the process,” he says. Rather, the option that makes economic sense in these cases is partial, redeployable automation safely operating alongside a human being. Collaborative automation is the way to achieve this.

Yet cobots typically realize this safety in part by operating at speed and force levels below what might cause harm, thereby limiting the maximum payload to perhaps 10 kilograms including the cobot’s end effector. Dr. Sobalvarro points out that only a sliver of industrial robot applications involve loads this light. Veo’s system aims to allow robots that are fast and powerful enough to seriously injure a human to be controlled so as to become collaborative as well.

The vision technology enabling this possibility arrived only within the last five years, he says. One of its earliest uses was video games. Safety is no game, of course, but the motion-tracking vision capability used by Veo is an industrial, longer-range version of the kind of motion sensing used by the Xbox Kinect game accessory.

Observing Occlusions

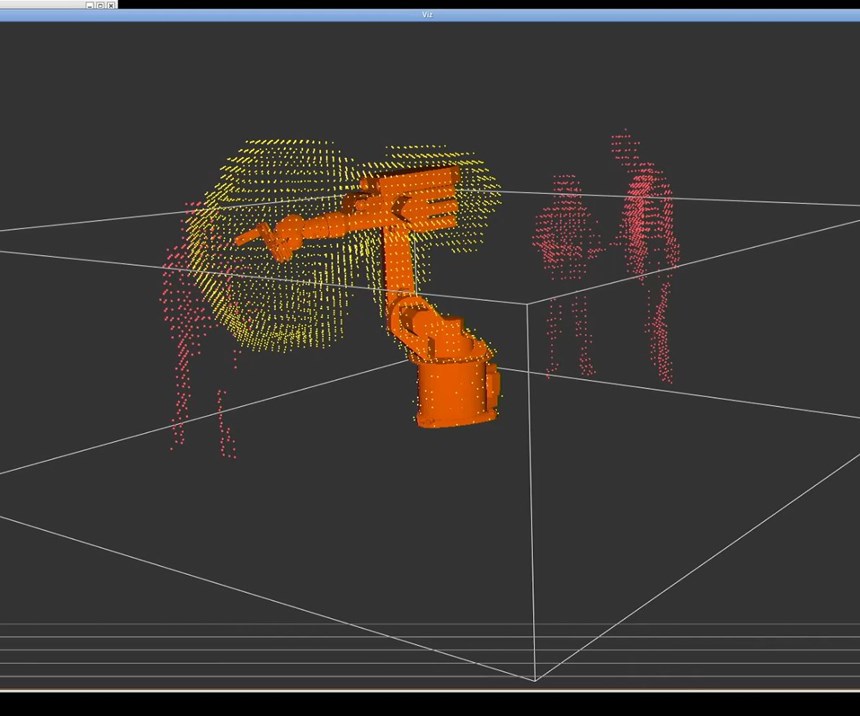

Dr. Sobalvarro explains how the system relying on this sensing technology works. Veo’s collaborative automation employs four or more vision cameras, mounted high above the robot, with overlapping fields of view. Each camera repeatedly sends infrared flashes to map every object in its field of view by means of the IR reflection. The resolution of the cameras developed by Veo is fine enough to distinguish a human finger at 10 meters. Thirty times per second, the collaborative system maps the vicinity of the robot by combining all the cameras’ data. Within this combined field, he says, the system is looking for occlusions.
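To make the idea of combining camera views concrete, here is a minimal Python sketch. It is not Veo's implementation: it assumes the work cell has been divided into a hypothetical grid of voxels, and that each camera reports the set of voxels it can currently see. A voxel counts as occluded when no camera sees it. The function name `occluded_voxels` and the grid representation are illustrative assumptions.

```python
from typing import Iterable, Set, Tuple

# Hypothetical representation: a voxel is an (x, y, z) grid index.
Voxel = Tuple[int, int, int]


def occluded_voxels(workcell: Set[Voxel],
                    seen_by_camera: Iterable[Set[Voxel]]) -> Set[Voxel]:
    """Return the voxels of the work cell that no camera can currently see."""
    # Union of everything visible to at least one camera.
    visible: Set[Voxel] = set().union(*seen_by_camera)
    # Whatever remains is hidden from every camera, i.e. an occlusion.
    return workcell - visible


if __name__ == "__main__":
    # A toy one-dimensional "work cell" of four voxels and two cameras
    # with overlapping views.
    cell = {(x, 0, 0) for x in range(4)}
    camera_a = {(0, 0, 0), (1, 0, 0)}
    camera_b = {(1, 0, 0), (2, 0, 0)}
    print(occluded_voxels(cell, [camera_a, camera_b]))
```

In this toy case the only voxel neither camera covers is the last one, so it is the one flagged. A system refreshing at the article's 30 samples per second would repeat a computation of this kind on every frame.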

That is, the system is looking for every volume of space that it cannot see because something is blocking its view. Any occlusion a little larger than a loaf of bread, or bigger, is assumed to be able to contain a human (meaning potentially a very small human), and analysis of these occlusions is used to detect safety concerns. Phase transitions logged through the 30 samples per second track the progress of any occlusion. If the course of movement of an occlusion can provide clearance for a human to have entered the work zone and come within range of the robot, then the space is considered “infected” by the occlusion and the system responds accordingly. From this occlusion’s current position, the Freemove software then compares future states based on possible moves of the robot and possible moves of the suspected human at a presumed (fast) speed of 2 meters per second. If the event horizons of these two actors lead to any possible state in which the human comes in contact with the robot, then the robot is slowed down or brought to a stop.
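The worst-case reasoning described here can be illustrated with a simplified speed-and-separation check. The sketch below is an assumption-laden illustration, not the Freemove algorithm: it supposes that the suspected human closes distance at the article's presumed 2 meters per second for as long as the robot takes to react and stop, while the robot keeps moving during its reaction time and then covers a stopping distance. The function names and the default timing and distance values are hypothetical.

```python
HUMAN_SPEED_M_S = 2.0  # presumed (fast) human speed cited in the article


def min_safe_separation(robot_speed: float, reaction_time: float,
                        stop_time: float, stop_distance: float) -> float:
    """Worst-case distance (m) the human and robot could close before the robot is stopped."""
    # The human may keep approaching until the robot is fully stopped.
    human_travel = HUMAN_SPEED_M_S * (reaction_time + stop_time)
    # The robot moves at full speed while the system reacts, then brakes.
    robot_travel = robot_speed * reaction_time + stop_distance
    return human_travel + robot_travel


def robot_action(distance: float, robot_speed: float,
                 reaction_time: float = 0.1,   # hypothetical value, seconds
                 stop_time: float = 0.3,       # hypothetical value, seconds
                 stop_distance: float = 0.2    # hypothetical value, meters
                 ) -> str:
    """Decide whether the robot may continue, given distance (m) to a suspected human."""
    needed = min_safe_separation(robot_speed, reaction_time, stop_time, stop_distance)
    return "continue" if distance > needed else "slow_or_stop"


if __name__ == "__main__":
    # With the hypothetical defaults and a robot moving at 1 m/s,
    # the required separation works out to about 1.1 m.
    print(robot_action(distance=2.0, robot_speed=1.0))
    print(robot_action(distance=1.0, robot_speed=1.0))
```

The design point is that the decision is a deterministic worst-case bound rather than a prediction: if any combination of moves could bring the two together, the robot slows or stops.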

Crucially, the system employs AI, but not machine learning, Dr. Sobalvarro says. Machine learning is probabilistic, whereas the relevant safety standards make clear that no statistical approaches for triggering safety measures are allowed. “Even very good machine learning could have one fail in 10,000, which is too large where human safety in a manufacturing environment is concerned,” he says. The AI instead entails rapid computation for occlusion analysis combined with classification according to model recognition.

The latter component, classification, makes the occlusion analysis more efficient. From the vision data, the system is equipped to identify the robot, fixturing and workpiece, ruling them out as suspect occlusions. This classification also helps make the system quick to install—a valuable side benefit. No precise locating of cameras is required, because once the cameras are mounted, the system can “zero” itself by locating the base of the robot.

Human Motives

Why is this artificial intelligence system safer than a fence? Because fences are vulnerable to human intelligence, Dr. Sobalvarro says. Humans follow conflicting motives that put them in danger. “The career of a person working in manufacturing involves a lot of training in safety, but it involves even more training in how important it is to keep production moving,” he says. In an instant, these two priorities can come into conflict. A worker who has dropped an item behind the safety fence might resist interrupting production just to retrieve it. That worker might see how to compromise the fence or move over or under it, a move made in an instant that might prove injurious or deadly. By contrast, there is no similar way to defeat the vision and AI system with just a single moment’s poor impulse. If the worker tried to do so by covering the camera, then this would produce an occlusion that would lead to a response from the system. With the vision and AI system, there just isn’t a fence that could be jumped.

There have been non-contact systems for speed and separation monitoring of robots before, light curtains among them. However, devices such as these can only be conservative, keeping the trigger boundary far beyond the robot’s reach. They don’t allow for collaboration, in which the human and robot work together easily in close proximity so that the automation does not have to accomplish every detail.

Indeed, Dr. Sobalvarro notes there is nothing about the Veo system that suits it specifically to a robot. The ultimate hope of the company is that any automated industrial machinery might be governed by a system such as this, producing unguarded factory floors through which people can move freely, in safety and without fear.

Related Content

The Power of Practical Demonstrations and Projects

Practical work has served Bridgerland Technical College both in preparing its current students for manufacturing jobs and in appealing to new generations of potential machinists.

Read MoreAddressing the Manufacturing Labor Shortage Needs to Start Here

Student-run businesses focused on technical training for the trades are taking root across the U.S. Can we — should we — leverage their regional successes into a nationwide platform?

Read MoreFinding the Right Tools for a Turning Shop

Xcelicut is a startup shop that has grown thanks to the right machines, cutting tools, grants and other resources.

Read MoreDN Solutions Responds to Labor Shortages, Reshoring, the Automotive Industry and More

At its first in-person DIMF since 2019, DN Solutions showcased a range of new technologies, from automation to machine tools to software. President WJ Kim explains how these products are responses to changes within the company and the manufacturing industry as a whole.

Read MoreRead Next

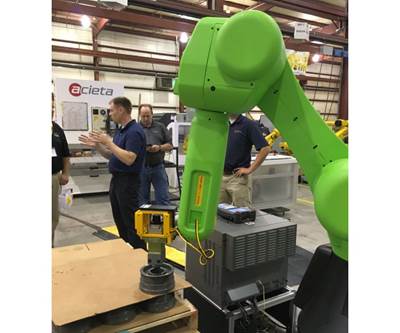

Acieta Event Explores the Use and Challenges of Collaborative Robots

Speakers describe some of the considerations relevant to the category of robots that are safe for operation near people.

Read MoreRegistration Now Open for the Precision Machining Technology Show (PMTS) 2025

The precision machining industry’s premier event returns to Cleveland, OH, April 1-3.

Read MoreSetting Up the Building Blocks for a Digital Factory

Woodward Inc. spent over a year developing an API to connect machines to its digital factory. Caron Engineering’s MiConnect has cut most of this process while also granting the shop greater access to machine information.

Read More