Breaking SPC Limitations Without Breaking Rules

Machine learning helps prove the merit of statistical analysis techniques that do not require plotting CNC machining quality data on a bell curve.

Nobody likes a dusty tabletop. Ideally, there is no dust on the table at all. At some point, however, the table cannot get any cleaner. Considering the dusting anything but a job well done would be neurotic. And yet, if this dusting were subject to the same quality control standards as many manufacturers’ CNC machining processes, even the most thorough cleaning might be deemed insufficient.

This is largely due to the limitations of statistical process control (SPC) rules, says Tom Stewart, president of data analysis software developer Q-DAS. Despite these limitations, nearly all major OEMs use SPC to determine what quality reports from parts suppliers should look like, and they do so for good reason. Evolving from Walter Shewhart’s first control charts in the early 1920s, SPC has long been the most effective means of ensuring that mass-produced parts are worthy of the automobiles and aircraft we routinely and unthinkingly trust with our lives. Nonetheless, quality requirements for machined parts often amount to expecting less than zero dust on the table, an impossible condition. As a result, suppliers must spend time and resources not on making parts, but on massaging numbers until the data no longer represents the reality of the process. Meanwhile, both suppliers and their customers miss opportunities to improve their processes and reduce the overall cost of bringing products to market.

Traditional SPC analysis is ill-suited for CNC machining because it assumes all data is normally distributed, Mr. Stewart says. Normally distributed data forms a symmetric bell curve: most points cluster around the mean, and progressively fewer fall one, two or three standard deviations away on either side. Returning to Mr. Stewart’s dusty-table analogy, measurements of dust thickness would not form such a curve. Because there cannot be less than zero dust on the table, the values would bunch up just above zero and trail off only to the right, producing a skewed distribution. Traditional SPC analysis of this skewed dataset could nonetheless lead to the conclusion that the process is inadequate or in need of improvement, even if the table is consistently clean. From surface finish to tool wear, the same essential concept applies to most measurements associated with precision machining.
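To make the analogy concrete, the sketch below fits a normal model to a small set of dust-thickness readings and asks two questions: how much probability does that bell curve assign to impossible negative thickness, and where would a band of the mean plus or minus three standard deviations sit? The readings and their units are invented for illustration, and the code uses only Python's standard `statistics` module.

```python
from statistics import NormalDist, mean, stdev

# Hypothetical dust-thickness readings (arbitrary units), invented for illustration.
# Like surface roughness, runout or tool wear, the values are bounded at zero
# and skewed to the right.
readings = [0.1, 0.2, 0.2, 0.3, 0.3, 0.4, 0.5, 0.6, 0.8, 1.0, 1.3, 1.9, 2.8]

mu = mean(readings)
sigma = stdev(readings)  # sample standard deviation
normal_model = NormalDist(mu, sigma)

# Probability the normal model assigns to negative dust: physically impossible,
# yet the bell curve treats it as a real outcome.
p_negative = normal_model.cdf(0.0)

# Mean plus or minus three standard deviations, computed under the normal assumption.
lower_limit = mu - 3 * sigma
upper_limit = mu + 3 * sigma

print(f"mean={mu:.2f} sd={sigma:.2f}")
print(f"P(thickness < 0) under normal model: {p_negative:.1%}")
print(f"3-sigma band: {lower_limit:.2f} to {upper_limit:.2f}")
```

With these made-up numbers, the normal model puts about 16% of readings below zero and places the lower three-sigma limit at a negative thickness. Neither can happen on a real table.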

Nonetheless, OEMs often see no other option than to demand that all quality data be evaluated as if it were normally distributed. The alternative — doing the math required to properly evaluate differing data distributions — would be a herculean task, Mr. Stewart says. A typical engine block presents thousands of machined features to evaluate, many of which are associated with multiple data points. For instance, a hole might require calculating diameter, true position and profile. “That’s three different GD&T (geometric dimensioning and tolerancing) call-outs, which could result in three different, non-normal distribution models, which could be analyzed with maybe 12 different mathematical factors,” he says. “Instead, OEMs want to keep it simple. Suppliers are forced to transform the data to a normal pattern prior to calculating the required quality indices. This takes time, and when the data model changes, it no longer represents the process.”
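The article does not say which transformation suppliers are asked to apply. One common choice is the Box-Cox power transform, and the sketch below shows the general pattern under that assumption: pick the transform parameter lambda, transform both the readings and the spec limit, then compute Cpk on the transformed scale. The readings, the upper spec limit of 4.0 and the helper names are hypothetical. The grid search is a simplification of a proper maximum-likelihood optimizer.

```python
import math
from statistics import fmean, pvariance, stdev

def box_cox(x: float, lam: float) -> float:
    """Box-Cox power transform; defined only for x > 0."""
    if abs(lam) < 1e-12:
        return math.log(x)
    return (x ** lam - 1.0) / lam

def box_cox_loglik(data: list[float], lam: float) -> float:
    """Profile log-likelihood of lambda, with additive constants dropped."""
    y = [box_cox(x, lam) for x in data]
    log_jacobian = sum(math.log(x) for x in data)
    return -0.5 * len(data) * math.log(pvariance(y)) + (lam - 1.0) * log_jacobian

def best_lambda(data: list[float], lo: float = -2.0, hi: float = 2.0, steps: int = 401) -> float:
    """Choose lambda by a simple grid search over [lo, hi]."""
    grid = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda lam: box_cox_loglik(data, lam))

def cpk_upper(data: list[float], usl: float) -> float:
    """One-sided Cpk against an upper spec limit; assumes normally distributed data."""
    return (usl - fmean(data)) / (3 * stdev(data))

# Hypothetical positive, right-skewed measurements and upper spec limit.
readings = [0.1, 0.2, 0.2, 0.3, 0.3, 0.4, 0.5, 0.6, 0.8, 1.0, 1.3, 1.9, 2.8]
usl = 4.0

lam = best_lambda(readings)
transformed = [box_cox(x, lam) for x in readings]
usl_transformed = box_cox(usl, lam)  # transform is increasing, so the limit maps consistently

cpk_raw = cpk_upper(readings, usl)
cpk_transformed = cpk_upper(transformed, usl_transformed)
print(f"lambda={lam:.2f}  Cpk(raw)={cpk_raw:.2f}  Cpk(transformed)={cpk_transformed:.2f}")
```

The transformed Cpk describes data on a rescaled axis, not the millimeters or microns coming off the machine. That is the sense in which the reported number can stop representing the process.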

He adds that the problem is widespread enough to have given rise to the phrase “show program for customer,” a satirical reference to SPC as a means of presenting data in the preferred format as opposed to a serious tool for evaluating quality or capability. For example, say a tool change leads to concentricity problems in machined holes. “If you take the data as is, you’ll be able to see that process change in a histogram,” he explains. In contrast, he says transforming the data to normal could “mathematically erase” that shift. “The numerics might be fine, but if you look at the raw data, you can clearly see that there is a problem. In other cases, you might get poor numbers but great results.”
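The “mathematically erase” effect is easiest to see with an aggressive transform. The sketch below applies rank-based normal scores (Blom plotting positions) to hypothetical concentricity readings that jump after a tool change. This is a deliberately extreme transform chosen only to make the effect obvious; the article does not say suppliers use it. A crude text histogram of the raw values shows two separate clusters with empty bins between them. The transformed values spread across every bin in a roughly bell-like shape. The ordering of parts survives, but the gap that signaled the process change no longer shows.

```python
from statistics import NormalDist

# Hypothetical concentricity readings (mm), invented for illustration:
# 12 parts before a tool change and 12 after it, with a clear jump in level.
before = [0.010, 0.011, 0.012, 0.010, 0.013, 0.011, 0.012, 0.010, 0.011, 0.012, 0.013, 0.011]
after = [0.025, 0.027, 0.026, 0.028, 0.025, 0.026, 0.027, 0.029, 0.026, 0.028, 0.027, 0.026]
raw = before + after

def histogram(values: list[float], bins: int = 6) -> list[int]:
    """Count values in equal-width bins spanning the data range."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return counts

def normal_scores(values: list[float]) -> list[float]:
    """Rank-based inverse normal transform; tied values share their average rank."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    std_normal = NormalDist()
    return [std_normal.inv_cdf((r - 0.375) / (n + 0.25)) for r in ranks]

for label, values in (("raw", raw), ("transformed", normal_scores(raw))):
    bars = " ".join("#" * count or "." for count in histogram(values))
    print(f"{label:<12}{bars}")
```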

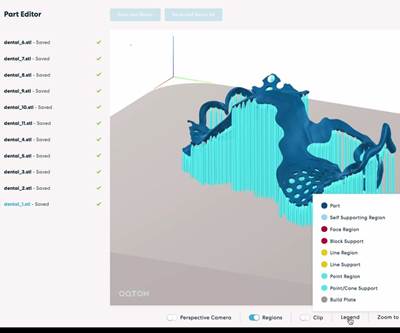

Since its founding in 1988, Q-DAS has built its business in large part on solving this “dust on the table” problem (the company is now part of Hexagon Manufacturing Intelligence). Its SPC software supports virtually every analysis technique permitted by ISO and other standards. It also supports OEM-specific reporting rules. A basic example is one customer requiring process capability index (Cpk) calculations while another requires process performance indices (Pp or Ppk). This makes switching between customers’ quality reporting standards simple.
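The difference between those requests comes down to which standard deviation goes into the formula. Under the common AIAG-style convention, Cp and Cpk use short-term, within-subgroup variation (here, the average subgroup range divided by the d2 constant), while Pp and Ppk use the overall standard deviation of all readings. The sketch below assumes that convention and normally distributed data; customers and standards may specify other estimators, and nothing here reflects how Q-DAS implements the calculations. The bore diameters and spec limits are hypothetical.

```python
from statistics import fmean, stdev

# Standard d2 constants relating the average subgroup range to sigma (subgroup sizes 2-5).
D2 = {2: 1.128, 3: 1.693, 4: 2.059, 5: 2.326}

def capability_indices(subgroups: list[list[float]], lsl: float, usl: float) -> dict[str, float]:
    size = len(subgroups[0])
    if size not in D2 or any(len(s) != size for s in subgroups):
        raise ValueError("sketch assumes equal subgroup sizes between 2 and 5")
    values = [x for s in subgroups for x in s]
    mean = fmean(values)
    # Short-term (within-subgroup) sigma from the average range: used for Cp and Cpk.
    sigma_within = fmean([max(s) - min(s) for s in subgroups]) / D2[size]
    # Long-term (overall) sigma from all individual values: used for Pp and Ppk.
    sigma_overall = stdev(values)

    def spread_index(sigma: float) -> float:
        return (usl - lsl) / (6 * sigma)

    def centered_index(sigma: float) -> float:
        return min(usl - mean, mean - lsl) / (3 * sigma)

    return {
        "Cp": spread_index(sigma_within),
        "Cpk": centered_index(sigma_within),
        "Pp": spread_index(sigma_overall),
        "Ppk": centered_index(sigma_overall),
    }

# Hypothetical bore diameters (mm): four subgroups of five parts, drifting between subgroups.
subgroups = [
    [10.00, 10.01, 9.99, 10.00, 10.02],
    [10.03, 10.04, 10.02, 10.03, 10.05],
    [9.97, 9.98, 9.96, 9.97, 9.99],
    [10.01, 10.02, 10.00, 10.01, 10.03],
]
result = capability_indices(subgroups, lsl=9.90, usl=10.10)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Because the hypothetical subgroups drift relative to one another, the overall sigma is larger than the within-subgroup sigma, so Pp and Ppk come out lower than Cp and Cpk for the very same parts.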

Just as importantly, the software can determine the best-fitting methods for performing the necessary calculations without the confusion of an artificially imposed normal distribution. Even if a given application’s reporting rules do not permit the best-fitting method, the ability to compare one data model to another makes the software as much an educational tool as a means of easily generating control charts and other complex quality documentation. “Suppliers can look customers in the eye and say, ‘We did what you asked us to do, and here are the results, but we’d also like to show you another way of looking at things that we think makes a lot more sense,’” Mr. Stewart explains.
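The article does not describe how the software selects a best-fitting model. One generic approach, sketched below with that caveat, fits several candidate distributions by maximum likelihood and ranks them with the Akaike information criterion (AIC = 2k - 2 ln L, where k is the number of fitted parameters; lower is better). Only three candidates are shown; an SPC package may consider many more models and use different selection criteria. The readings are hypothetical.

```python
import math
from statistics import NormalDist, fmean, pstdev

def fit_normal(data: list[float]) -> tuple[float, int]:
    """Maximum-likelihood normal fit; returns (log-likelihood, parameter count)."""
    dist = NormalDist(fmean(data), pstdev(data))  # MLE uses the population sd
    return sum(math.log(dist.pdf(x)) for x in data), 2

def fit_lognormal(data: list[float]) -> tuple[float, int]:
    """Maximum-likelihood lognormal fit via a normal fit to ln(x)."""
    logs = [math.log(x) for x in data]
    dist = NormalDist(fmean(logs), pstdev(logs))
    # Change of variables: ln f_X(x) = ln f_Y(ln x) - ln x
    return sum(math.log(dist.pdf(y)) - y for y in logs), 2

def fit_exponential(data: list[float]) -> tuple[float, int]:
    """Maximum-likelihood exponential fit (rate = 1 / mean)."""
    rate = 1.0 / fmean(data)
    return sum(math.log(rate) - rate * x for x in data), 1

CANDIDATES = {"normal": fit_normal, "lognormal": fit_lognormal, "exponential": fit_exponential}

def rank_by_aic(data: list[float]) -> list[tuple[str, float]]:
    """Return (model name, AIC) pairs, best (lowest AIC) first."""
    scores = {}
    for name, fit in CANDIDATES.items():
        loglik, k = fit(data)
        scores[name] = 2 * k - 2 * loglik
    return sorted(scores.items(), key=lambda item: item[1])

# Hypothetical positive, right-skewed measurements.
readings = [0.1, 0.2, 0.2, 0.3, 0.3, 0.4, 0.5, 0.6, 0.8, 1.0, 1.3, 1.9, 2.8]
ranking = rank_by_aic(readings)
for name, aic in ranking:
    print(f"{name:<12} AIC = {aic:.2f}")
```

With these invented readings the normal model ranks last by a wide margin. The exponential and lognormal models score almost identically, which suggests the sample is too small to choose confidently between them.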

The addition of machine learning will make conveying this message easier, he says. Fed with enough data over time, learning algorithms will perform much of the heavy mental lifting required to correlate process variations that might otherwise seem unrelated or insignificant. The benefits of the right analysis techniques will be easier to identify.

As an example, he cites a gun-drilled automotive shaft that becomes warped during heat treatment, prompting an investigation into possible causes. Armed with analysis techniques that do not distort the reality of the process, a Q-DAS user could trace the warpage to extra heat generated by chip-clearing difficulties during gun drilling. However, this takes time and effort. Machine learning algorithms, in contrast, will make the necessary correlations automatically. Such capability, which is expected to be field-tested within a year, will make SPC more effective not only as an analysis tool, but also as a facilitator of intelligent conversation about issues like “the dust on the table.”
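The article does not describe the algorithms involved, and real machine learning would capture nonlinear, lagged and multivariable effects that this sketch ignores. The underlying idea of surfacing which recorded process variables move together with a quality outcome can still be illustrated with a plain Pearson correlation screen. Everything below is hypothetical: the variable names, the 200 synthetic shafts and the built-in link between gun-drilling temperature and warpage.

```python
import math
import random

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def rank_candidates(process_vars: dict[str, list[float]], outcome: list[float]) -> list[tuple[str, float]]:
    """Rank process variables by the absolute strength of their correlation with the outcome."""
    scores = {name: pearson(values, outcome) for name, values in process_vars.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

# Synthetic, seeded data for 200 hypothetical shafts. Warpage is deliberately
# generated to depend on gun-drilling zone temperature and nothing else.
rng = random.Random(42)
n = 200
process_vars = {
    "gun_drill_zone_temp_c": [rng.gauss(60, 5) for _ in range(n)],
    "coolant_pressure_bar": [rng.gauss(70, 3) for _ in range(n)],
    "spindle_load_pct": [rng.gauss(40, 4) for _ in range(n)],
    "furnace_temp_c": [rng.gauss(850, 5) for _ in range(n)],
}
warpage_mm = [0.002 * (t - 60) + rng.gauss(0, 0.003) for t in process_vars["gun_drill_zone_temp_c"]]

for name, r in rank_candidates(process_vars, warpage_mm):
    print(f"{name:<24} r = {r:+.2f}")
```

A strong correlation only flags a candidate for investigation; it does not prove cause. That is why the engineering follow-up, such as confirming the chip-clearing problem on the machine, still matters.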
